tensorflow - object detection Training becomes slower in time. Uses more CPU than GPU as the training progresses - Stack Overflow

GitHub - moritzhambach/CPU-vs-GPU-benchmark-on-MNIST: compare training duration of CNN with CPU (i7 8550U) vs GPU (mx150) with CUDA depending on batch size

TensorFlow Performance with 1-4 GPUs -- RTX Titan, 2080Ti, 2080, 2070, GTX 1660Ti, 1070, 1080Ti, and Titan V | Puget Systems

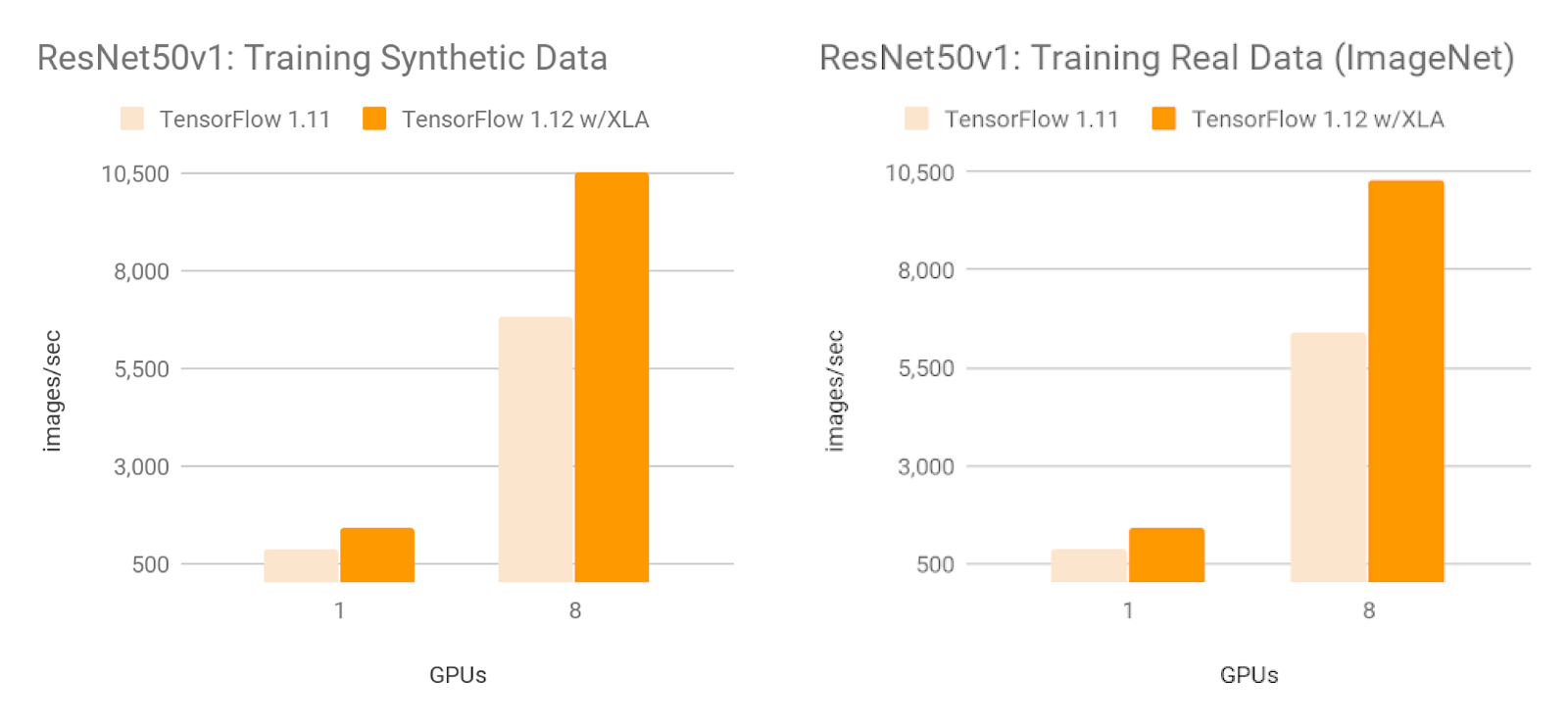

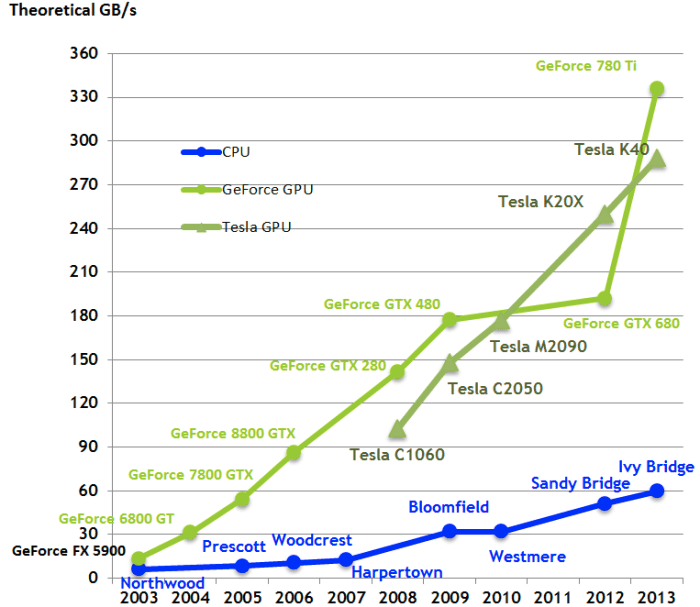

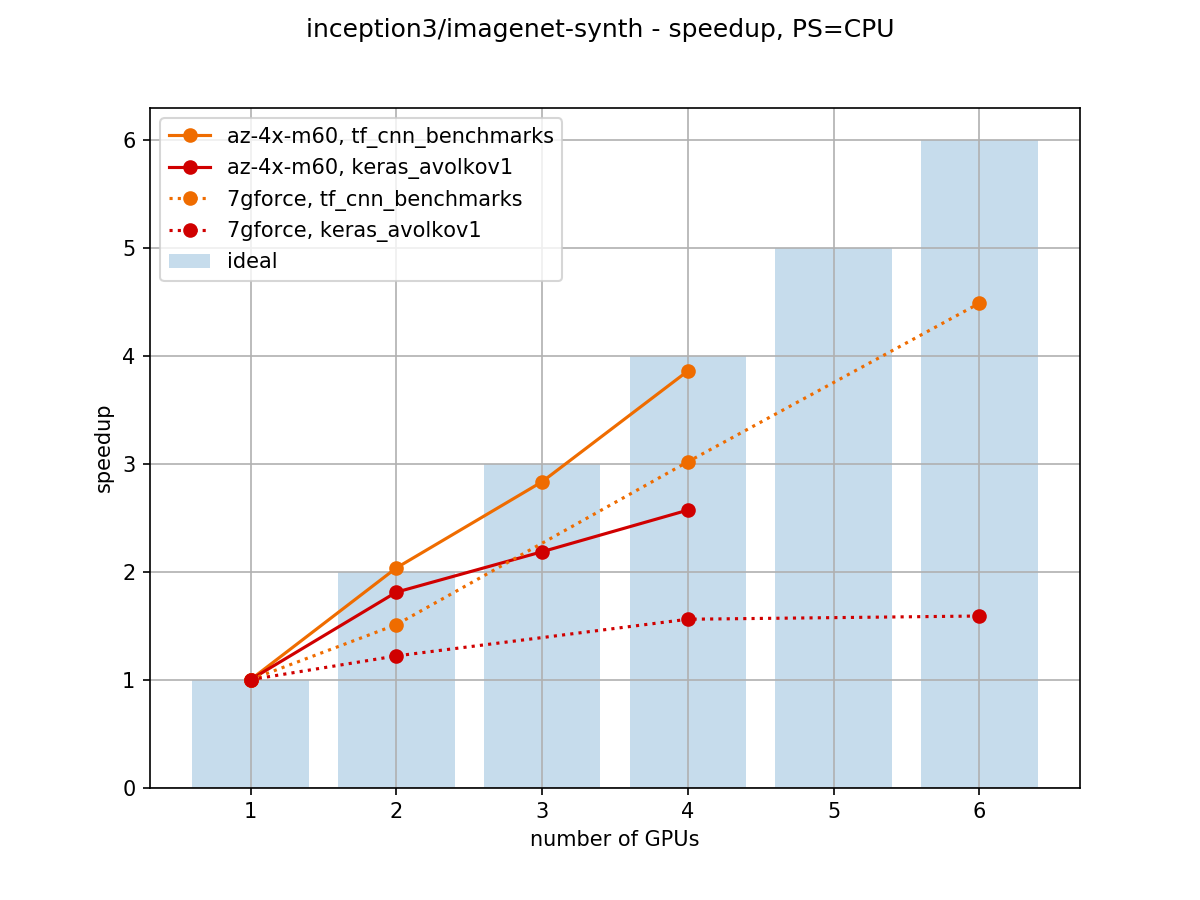

Towards Efficient Multi-GPU Training in Keras with TensorFlow | by Bohumír Zámečník | Rossum | Medium

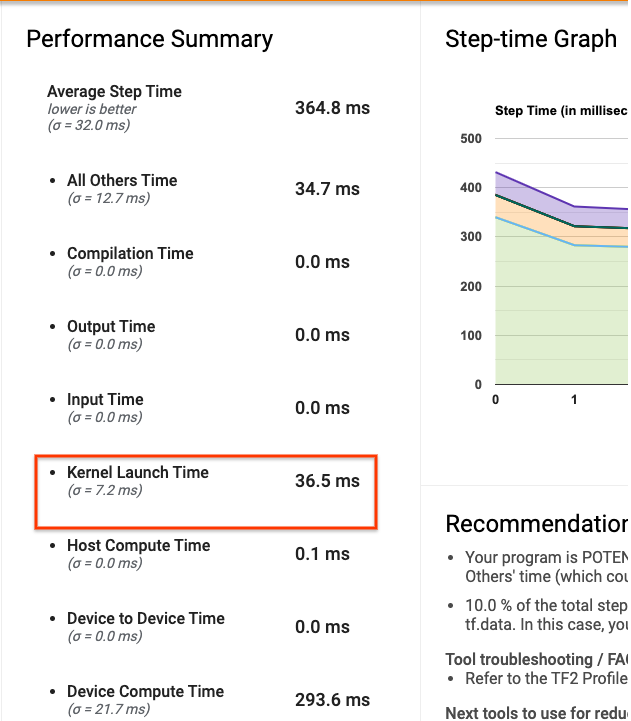

keras with tensorflow backend is 4x slower than normal keras on GPU machines · Issue #38689 · tensorflow/tensorflow · GitHub

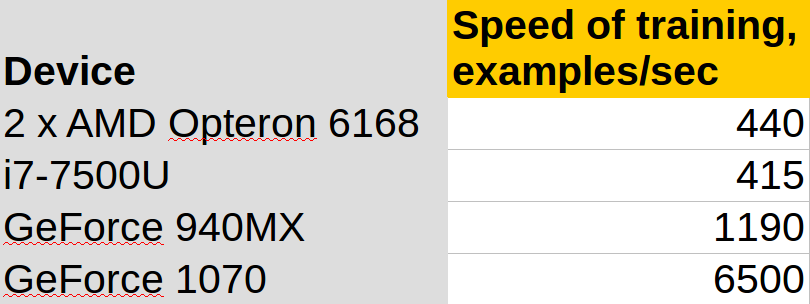

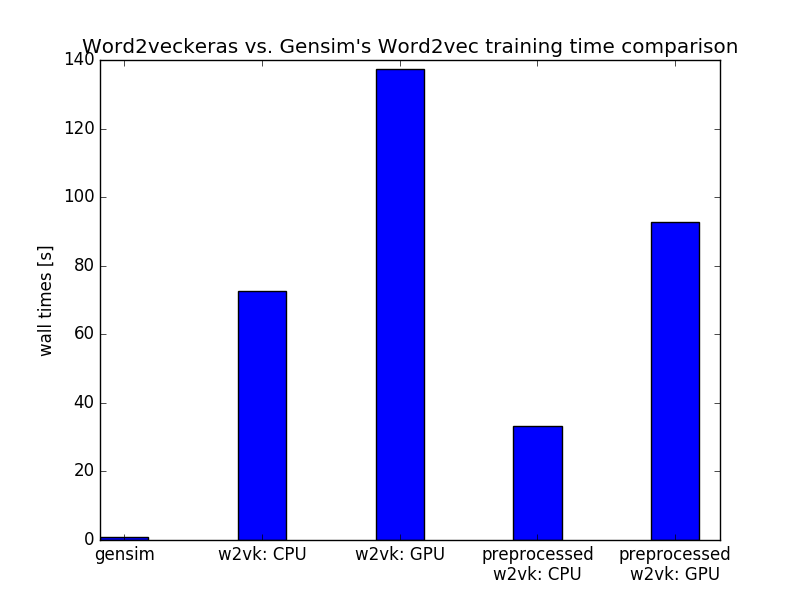

Gensim word2vec on CPU faster than Word2veckeras on GPU (Incubator Student Blog) | RARE Technologies

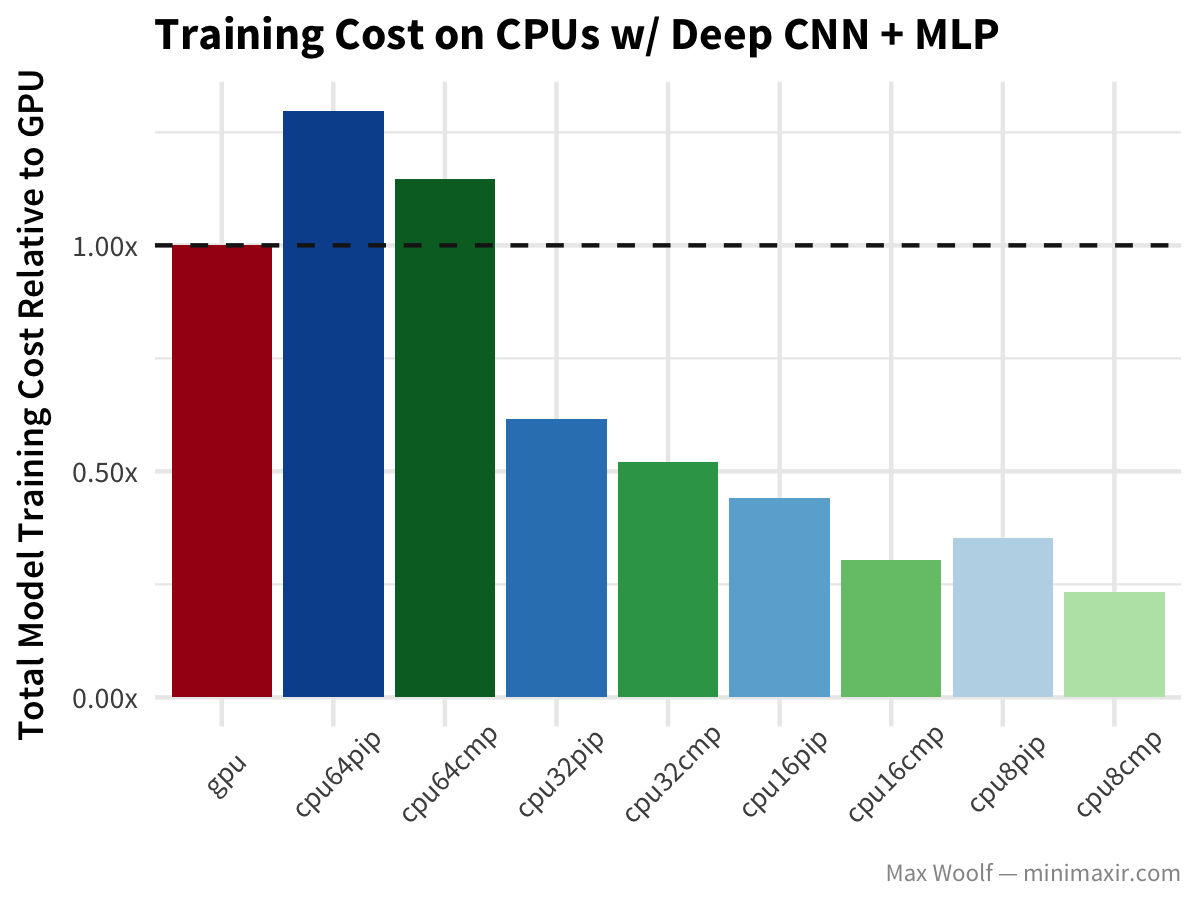

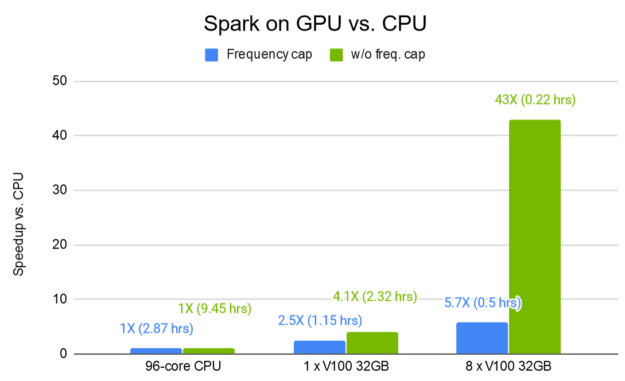

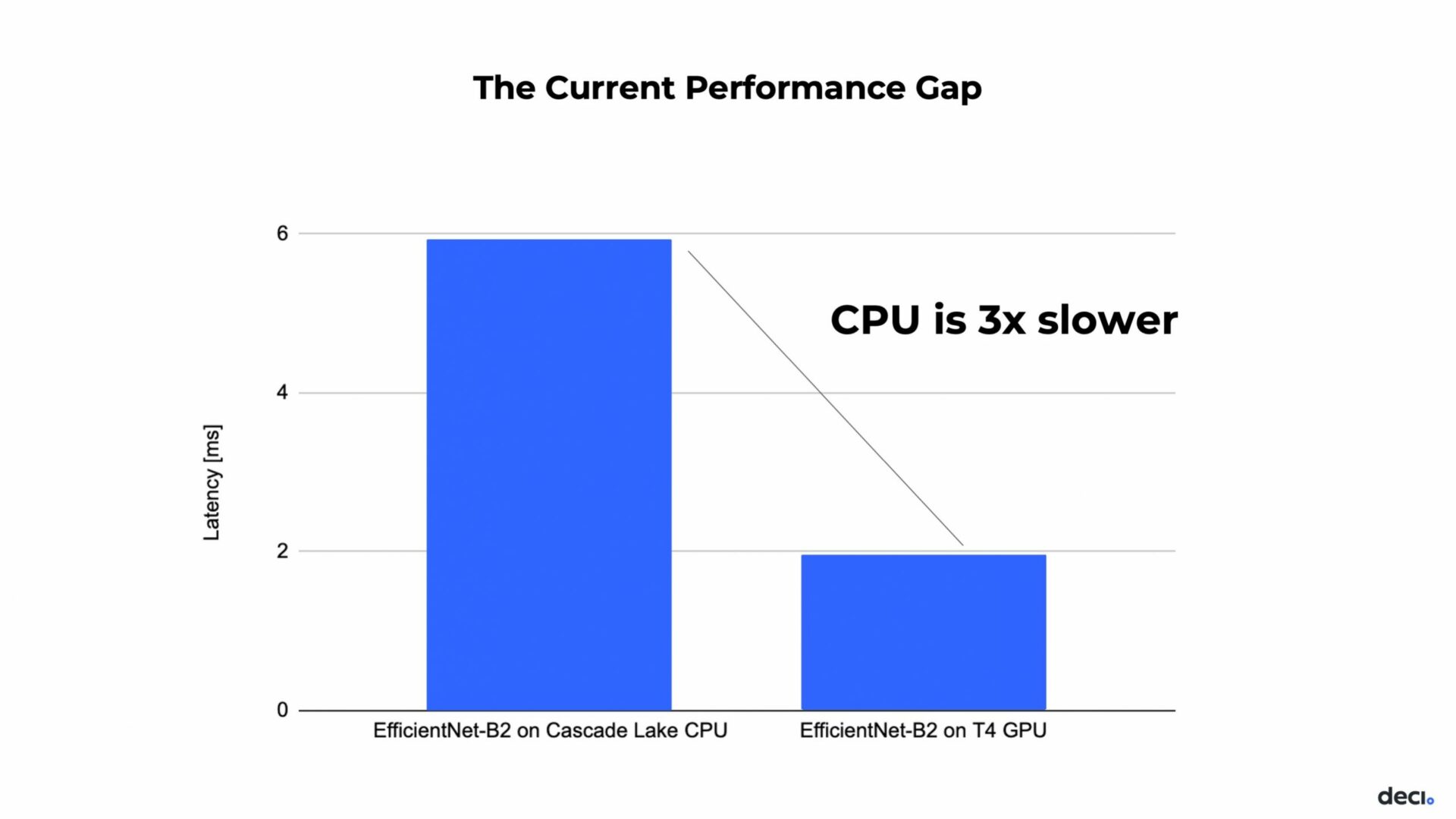

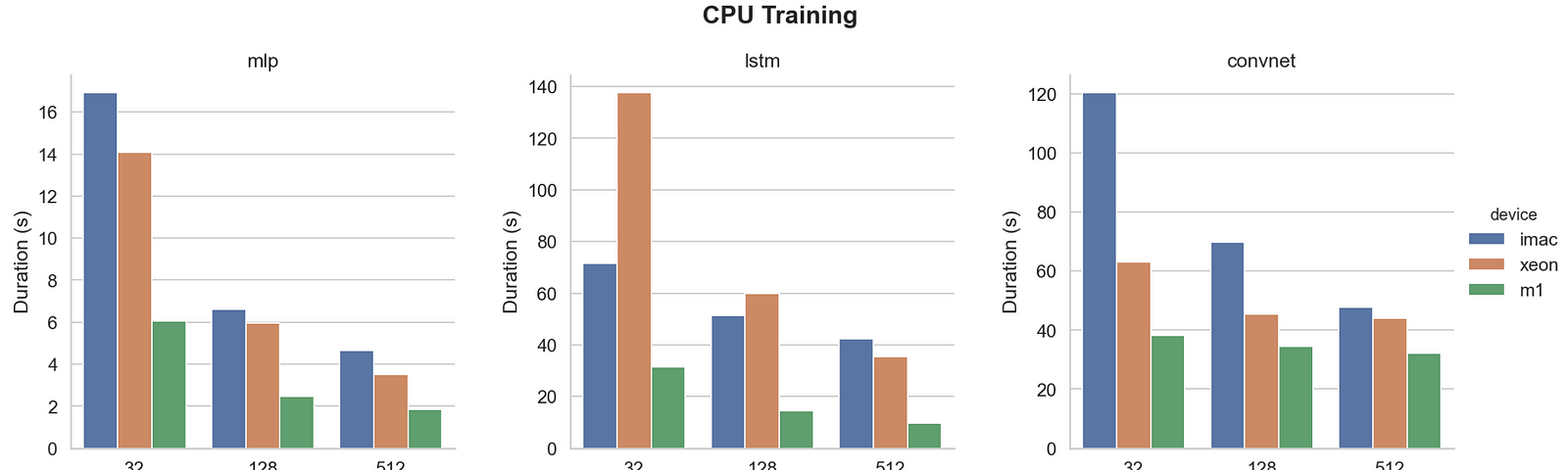

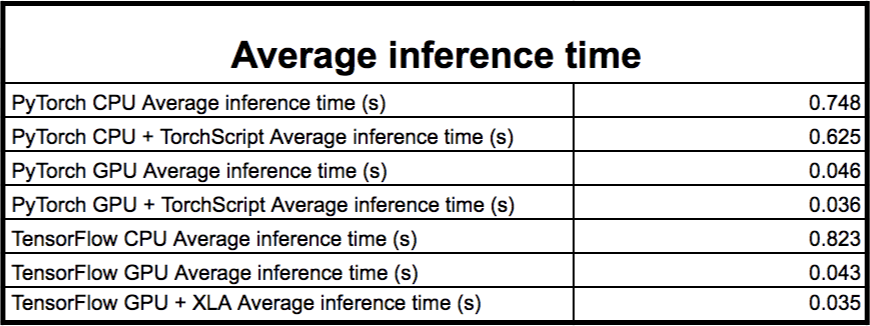

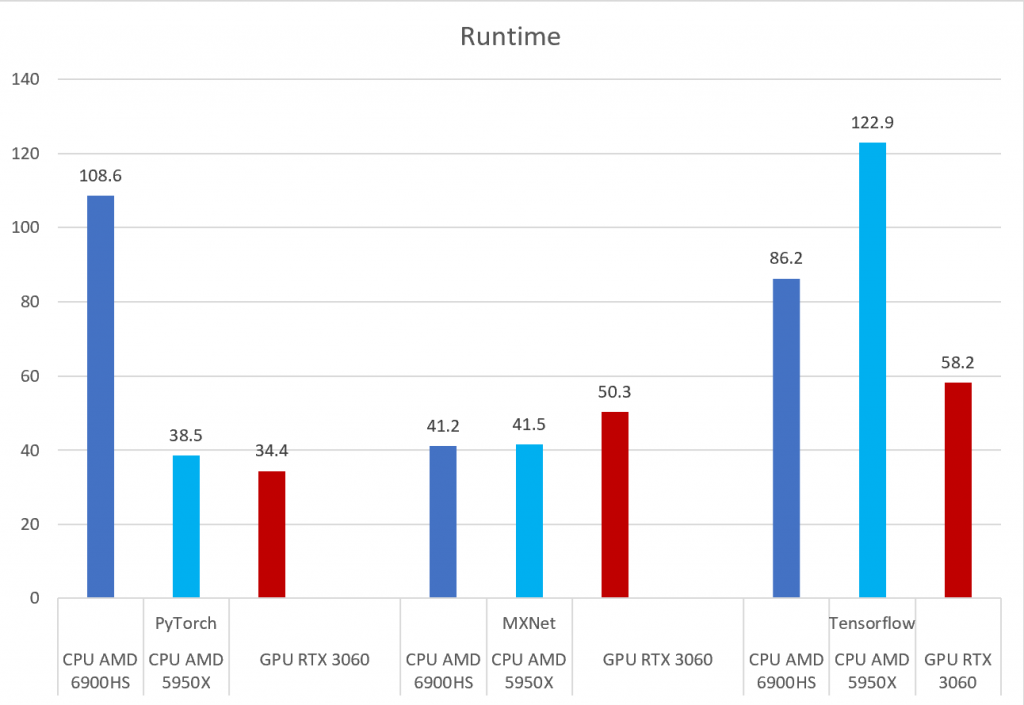

PyTorch, Tensorflow, and MXNet on GPU in the same environment and GPU vs CPU performance – Syllepsis